The conversations are interesting and varied because they’re about new, exciting, different things. After all, that’s what tech innovation is all about. But there are some common elements too. One common element – and one that’s pretty surprising – is that often, no one has asked these innovators even the most basic technical questions.

Even when they have talked to multiple developers or development firms, we’re often the first to ask basic questions like “Who are your customers?” or “Are you developing for desktop, tablet, mobile, or all three?”

Of course, it’s more complicated than just checking boxes on a question list. The innovator/developer relationship needs to be a conversation. Still, if you’re a business leader and your developers haven’t asked you these questions, look for a Fractional CTO to help navigate the critical early stage of development.

Background Questions

Let’s start with some background questions about the business and product. Think of these as the big upfront questions a developer should ask to get an overall picture.

- Who are the customers? What’s their specific need/pain? Can you provide specific examples of different types of customers, what they need, and what the system will do for them?

- Tell me about the business. How are you funding this? What level of funding do you currently have? Who’s helping you with fundraising? Do you have legal agreements (Founder Agreement, IP, etc.) in place?

- What are your big milestones? Do you have any deals done or in progress that are tied to those milestones? Where are you today, and what’s happening right now?

- What’s been done so far to validate the concept?

- Who are the other stakeholders involved? Are there other founders, business leaders, partners, or administrators?

- How will you be taking this to market? What channels will you use (e.g., Ads, Viral/Social, SEO)? Is anyone working with you on this?

- What are your key startup metrics? How do you make your money? How do you measure success?

- Who are your big competitors? What are some sites or companies in the same space? How will you differentiate from these?

- What is different or special here? What’s the secret sauce? Do you have a custom algorithm or other technology?

- What special data, content, APIs, etc., will you leverage? What’s the state of the relationships that bring you that data? What’s the state of those systems?

- Where do you stand on your brand? Do you have a name, a logo, and have you thought about brand positioning? What are some examples of similar brands?

- Are there any specific hard dates or important time-sensitive opportunities?

- What do you see as your biggest risks and challenges?

- What are the key features in each major phase of your application? What functionality would make your company launch-ready?

- What has been captured so far? Are there user stories? Mock-ups? Wireframes? Comps?

- What problem is your product trying to solve?

- If you launched tomorrow, how many users would you forecast? Six months from now? A year from now? How quickly will we need to scale the application?

Questions Developers May Have Forgotten to Ask

Here are some additional topics that might have slipped your developers’ minds. The questions to ask for each topic follow the list.

- eCommerce

- Targets

- Registration

- Artificial Intelligence

- Member Profiles

- Social Integration/Viral Outreach

- Communication/Forums

- Social Interaction

- Internationalization/Localization

- Location/Geography

- Gamification/Scoring

- Video and Audio

- Notifications

- Email / SMS

- AI Assisted Development

- Marketing Support

- SEO Support

- Content Management

- Dates and Time Zones

- Search

- Logging/Auditing

- Analytics/Metrics

- Administration

- Reporting

- Accounting

- Customer Support

- Security

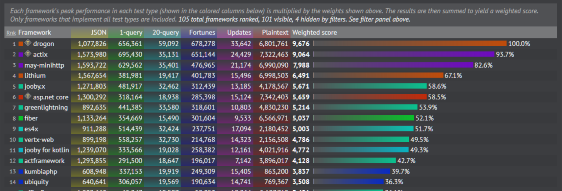

- Performance

- Integration Points

- Existing Capabilities

- Hosting

- Platform

- Team and Process

- Product Management

- Compliance

- Expansion

eCommerce

Does your startup run on a subscription model? How many kinds of subscriptions do you support? What are the rules for subscriptions? Do you support discounts? Free trials? Bundling? Coupons?

Often this ties to marketing support. For example, you might want to offer a discount to a specific group as an incentive.
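Answers to these questions usually end up as explicit pricing rules in code. As a rough illustration, here is a minimal Python sketch, assuming monthly plans and percentage-off coupons restricted to specific customer groups; the `Plan` and `Coupon` types and their fields are hypothetical, not a recommendation for any particular billing system.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal, ROUND_HALF_UP


@dataclass(frozen=True)
class Plan:
    name: str
    monthly_price: Decimal


@dataclass(frozen=True)
class Coupon:
    code: str
    percent_off: Decimal             # e.g. Decimal("20") means 20% off
    eligible_groups: frozenset[str]  # hypothetical: customer groups allowed to use this coupon


def monthly_charge(plan: Plan, customer_group: str, coupon: Coupon | None = None) -> Decimal:
    """Return the monthly charge, applying the coupon only if the customer's group is eligible."""
    price = plan.monthly_price
    if coupon is not None and customer_group in coupon.eligible_groups:
        price = price * (Decimal(100) - coupon.percent_off) / Decimal(100)
    # Round to whole cents, half-up, the way most invoices present amounts
    return price.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


pro = Plan("Pro", Decimal("29.99"))
student_deal = Coupon("STUDENT20", Decimal("20"), frozenset({"students"}))
print(monthly_charge(pro, "students", student_deal))  # 23.99
print(monthly_charge(pro, "general", student_deal))   # 29.99
```

Using `Decimal` rather than floating point keeps currency rounding predictable. Free trials, bundles, and stacked coupons would each add more rules like these, which is why it pays to pin them down early.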

Targets

Are you developing a native app and/or a web app? Are you targeting desktop, tablet, or mobile? Can you do a hybrid web/native application? Which devices will you test on specifically? Most new sites need to account for mobile delivery – but on the other hand, not every MVP needs both desktop and mobile versions.

Registration

Do you plan to support Google Sign-In, Facebook Connect, or similar third-party authentication? If so, will you also have your own account system? Will you validate new members’ email addresses and/or phone numbers?
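If you do validate email addresses, a common approach is to email new members a link containing a signed, expiring token. The sketch below uses only Python’s standard library (`hmac`, `hashlib`); the secret key, the token format, and the 24-hour lifetime are assumptions for illustration.

```python
from __future__ import annotations

import hashlib
import hmac
import time

SECRET_KEY = b"change-me"       # hypothetical: load a long random secret from configuration
MAX_AGE_SECONDS = 24 * 60 * 60  # assumption: verification links expire after 24 hours


def _sign(email: str, issued_at: int) -> str:
    message = f"{email}|{issued_at}".encode("utf-8")
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def make_token(email: str, issued_at: int | None = None) -> str:
    """Create a token of the form '<issued_at>.<signature>' to embed in a verification link."""
    ts = int(time.time()) if issued_at is None else issued_at
    return f"{ts}.{_sign(email, ts)}"


def verify_token(email: str, token: str, now: int | None = None) -> bool:
    """Accept the token only if its signature matches this email and it has not expired."""
    try:
        ts_text, signature = token.split(".", 1)
        issued_at = int(ts_text)
    except ValueError:
        return False  # malformed token
    current = int(time.time()) if now is None else now
    if issued_at > current or current - issued_at > MAX_AGE_SECONDS:
        return False  # issued in the future, or expired
    expected = _sign(email, issued_at).encode("utf-8")
    # compare_digest avoids leaking, through timing, how many characters matched
    return hmac.compare_digest(expected, signature.encode("utf-8"))


token = make_token("ana@example.com", issued_at=1_700_000_000)
print(verify_token("ana@example.com", token, now=1_700_000_600))  # True
print(verify_token("bob@example.com", token, now=1_700_000_600))  # False
```

Because the token is signed rather than stored, the server can check it without a database lookup; the tradeoff is that an individual token cannot be revoked before it expires.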

Artificial Intelligence

Does your application leverage AI in any way? For customer service? To personalize customer recommendations? How can we use AI to improve the customer experience?

Member Profiles

What data does a member profile include? Is there a step-by-step wizard? Can members upload their pictures? How much profile information do you need before allowing a user to register?

Social Integration/Viral Outreach

Is your application tied into any social networks? How tight is that integration? Is it limited to login and Like buttons, or are you building a presence within the social networks themselves? What about other kinds of viral outreach?

Communication/Forums

Are there discussion forums? Commenting? Messaging? If you have boards or comments, do you support flagging? Moderation?

Social Interaction

Do users/members relate to one another? If so, how do they interact? Are users otherwise grouped by the system, maybe by background (employer, university) or preferences?

Internationalization/Localization

Do you anticipate an international audience? How important is support for multiple languages? For multiple character sets? How do we prioritize internationalization versus getting something to market?
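One early, low-cost internationalization decision is how the application behaves when a translation is missing. Here is a minimal Python sketch, assuming an in-memory message catalog (`MESSAGES` is hypothetical; real projects usually load strings from gettext catalogs or similar translation files) and a fallback chain from a regional locale such as `pt-BR` to its base language and then to English.

```python
from __future__ import annotations

DEFAULT_LOCALE = "en"

# Hypothetical message catalog keyed by locale, then by message key
MESSAGES: dict[str, dict[str, str]] = {
    "en": {"greeting": "Welcome back!", "logout": "Sign out"},
    "pt": {"greeting": "Bem-vindo de volta!"},
}


def translate(key: str, locale: str) -> str:
    """Look up a message, falling back from e.g. 'pt-BR' to 'pt' to the default locale."""
    candidates = [locale]
    if "-" in locale:
        candidates.append(locale.split("-", 1)[0])  # regional locale -> base language
    candidates.append(DEFAULT_LOCALE)
    for candidate in candidates:
        text = MESSAGES.get(candidate, {}).get(key)
        if text is not None:
            return text
    return key  # last resort: show the key so missing strings are easy to spot


print(translate("greeting", "pt-BR"))  # Bem-vindo de volta!
print(translate("logout", "pt-BR"))    # Sign out (no Portuguese string yet)
```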

Location/Geography

Is your application location-aware? Does it tap into geolocation services provided by the browser or rely on a third-party lookup table? How are you using geographic information? How does the application behave when location data is not available?

Gamification/Scoring

Does your application include any kind of scoring and/or gamification? Are there achievements and badges? Is there a leaderboard for users or teams?

Video and Audio

Are you hosting your own video, or can we use a third-party host like YouTube or Vimeo? Do you need to process user-contributed media? What about reporting and moderation?

Notifications

What notifications does your application need? Are they dismissible? Do they generate emails or push notifications?

Email / SMS

Does your application send out transactional emails or SMS messages? Does it send messages in bulk? How are those bulk messages crafted? How often is message content updated? Do you need to track views and bounces? What are your privacy rules?

AI Assisted Development

How can we use AI to speed up our SDLC (software development life cycle)? How can we leverage AI to get our product to market faster?

Marketing Support

What does your application need to do to help with marketing? Do you have specific landing pages? What are your referral sources, and what tracking do you need around these sources? Do you rely on affiliates? Is there a need for A/B testing?
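If A/B testing is on the list, the application needs a stable way to place each visitor in a variant. One common technique, sketched here in Python under the assumption that every user has a stable ID, is to hash the experiment name together with the user ID, so the same user always sees the same variant without the assignment being stored anywhere. The function and experiment names are hypothetical.

```python
from __future__ import annotations

import hashlib


def ab_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically assign a user to one variant of a named experiment."""
    if not variants:
        raise ValueError("at least one variant is required")
    # Hashing experiment + user gives a stable, roughly uniform bucket per user
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


print(ab_variant("user-42", "landing-page-headline", ["A", "B"]))
```

Including the experiment name in the hash means a user’s bucket in one test does not determine their bucket in the next.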

SEO Support

Will URLs and page content need to be properly formed for SEO? What back-end support for SEO is needed?
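“Properly formed” URLs usually means readable, lowercase, hyphenated slugs derived from page titles. Here is a minimal Python sketch, assuming ASCII-only slugs are acceptable; `slugify` is a hypothetical helper name.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a lowercase, hyphen-separated URL slug."""
    # Split accented letters into base letter + combining mark, then drop non-ASCII (é -> e)
    normalized = unicodedata.normalize("NFKD", title)
    ascii_text = normalized.encode("ascii", "ignore").decode("ascii")
    # Replace each run of characters other than a-z and 0-9 with a single hyphen
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_text.lower())
    return slug.strip("-")


print(slugify("10 Questions Your Developer Should Ask!"))  # 10-questions-your-developer-should-ask
print(slugify("Café & Crème"))                             # cafe-creme
```

Dropping non-ASCII characters this way removes non-Latin scripts entirely (`slugify("日本語")` returns an empty string), so if internationalization matters, slug rules belong in that discussion too.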

Content Management

How often will the application’s content need to change? Who will be doing the changes? Will you need to add arbitrary new pages? Should content changes be scheduled? Are members contributing content or only system administrators?

Dates and Time Zones

Does the application need to support multiple time zones? Does it need to convert dates and times to each user’s local time zone automatically?
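A common rule of thumb is to store timestamps in UTC and convert them to each user’s time zone only for display. The Python sketch below assumes Python 3.9+ (for the standard `zoneinfo` module, which needs time zone data available on the system) and that each user’s IANA time zone name, such as `America/New_York`, is known; the function names are hypothetical.

```python
from datetime import datetime, timezone
from zoneinfo import ZoneInfo


def local_to_utc(local_time: datetime, tz_name: str) -> datetime:
    """Interpret a naive wall-clock time in the user's zone and convert it to UTC for storage."""
    return local_time.replace(tzinfo=ZoneInfo(tz_name)).astimezone(timezone.utc)


def utc_to_local(utc_time: datetime, tz_name: str) -> datetime:
    """Convert a stored UTC timestamp to a user's local time for display."""
    return utc_time.astimezone(ZoneInfo(tz_name))


stored = local_to_utc(datetime(2024, 7, 1, 9, 0), "America/New_York")
print(stored)                              # 2024-07-01 13:00:00+00:00
print(utc_to_local(stored, "Asia/Tokyo"))  # 2024-07-01 22:00:00+09:00
```

Using named zones rather than fixed UTC offsets lets the library handle daylight saving time: 9:00 in New York is 13:00 UTC in July but 14:00 UTC in January.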

Search

Does the application include search? What content is searchable? How advanced does it need to be?

Logging/Auditing

What key operations need to be logged for auditing? What needs to be logged for customer support?
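Once you know which operations matter, each one can be recorded as a structured entry that answers who did what, to what, and when. Here is a minimal Python sketch using the standard `logging` and `json` modules; the `audit` logger name and the entry fields are assumptions.

```python
import json
import logging
from datetime import datetime, timezone

audit_logger = logging.getLogger("audit")  # hypothetical logger name


def audit(actor_id: str, action: str, target: str, **details: object) -> str:
    """Record who did what to which object as one JSON line, and return that line."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor_id,
        "action": action,
        "target": target,
        "details": details,
    }
    # default=str turns values json can't serialize natively (dates, UUIDs) into strings
    line = json.dumps(entry, sort_keys=True, default=str)
    audit_logger.info(line)
    return line


audit("admin-7", "user.suspend", "user-42", reason="spam reports")
```

Python’s `logging` discards INFO-level messages until a handler and level are configured (for example with `logging.basicConfig(level=logging.INFO)`), and audit entries usually belong in storage that ordinary users cannot modify.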

Analytics/Metrics

What key startup metrics will you need to track? What metrics will you need for future funding rounds or operations?

Administration

What will you need to do from a back-end administration interface? Administer users? Send messages?

Reporting

What needs to be reported? Are CSV/Excel exports sufficient, or do you need something more? Reporting can be endless! Our advice: keep it small to start.
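Keeping reporting small often means starting with a plain CSV export, which opens directly in Excel. Here is a minimal Python sketch using the standard `csv` module; the function name and the column names in the example are hypothetical.

```python
from __future__ import annotations

import csv
import io


def rows_to_csv(rows: list[dict], columns: list[str]) -> str:
    """Render report rows as CSV text with a header row; keys not in `columns` are left out."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, extrasaction="ignore")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


report = [
    {"month": "2024-01", "signups": 120, "internal_note": "not for export"},
    {"month": "2024-02", "signups": 135},
]
print(rows_to_csv(report, ["month", "signups"]))
```

`DictWriter` writes `\r\n` line endings by default; when writing to a file instead of a string, open it with `newline=""` as the `csv` documentation recommends.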

Accounting

Beyond reviewing transactions, what accounting support do you need? Do you need to track inventory? Fulfillment?

Customer Support

Do you need specific interfaces and support for customer service? Do you need a ticket system? What about an AI support assistant?

Security

What are the business / application’s specific security risks? Does it need to throttle potential malicious activity? This is generally a significant discussion in itself! Our advice: be pragmatic!
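Throttling is often implemented as per-client rate limiting. The Python sketch below shows one well-known algorithm, a token bucket, keyed by client IP address; the capacity and refill rate are placeholder values, the function names are hypothetical, and a deployment with several servers would typically keep these counters in a shared store rather than in memory.

```python
from __future__ import annotations

import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, capacity: float, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity  # start full so a new client can make a short burst
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, capped at capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Placeholder limits: bursts of 10, then about 2 requests per second per client IP
buckets: dict[str, TokenBucket] = {}


def allow_request(client_ip: str) -> bool:
    bucket = buckets.get(client_ip)
    if bucket is None:
        bucket = buckets[client_ip] = TokenBucket(capacity=10, rate=2.0)
    return bucket.allow()
```

The injectable `clock` parameter exists mainly so the refill behavior can be tested without waiting in real time.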

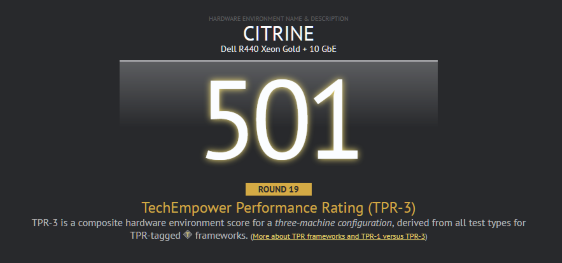

Performance

What is the expected request volume? What response time characteristics are required? How complex is the application? Complexity can directly impact performance.

Integration Points

What third-party systems will we need to integrate with? How far along are any integration efforts? What is the business relationship with the third parties? Who controls access to the third-party accounts, if any?

Existing Capabilities

What capabilities and personnel do you already have access to? Graphic design? UI/UX design? A Product Manager? How much availability do they have to work on this effort?

Hosting

What hosting requirements does your application have? Do you have any existing hosting relationships?

Platform

Are there pre-existing technical platform decisions that must be considered?

Team and Process

Are you using, or planning to use, any software development methodologies? How big is the anticipated development team? How will it be structured?

Product Management

Do you have a clear vision of the initial application and a plan for sequencing changes after the initial launch? Do you have the internal staff to manage changes?

Compliance

What regulatory compliance do you need to support? GDPR? CCPA? HIPAA?

Expansion

What is your vision for the expansion of the application? What features should be in place at launch? Six months from now? A year from now?