This project measures the high-water mark performance of server-side web application frameworks and platforms using predominantly community-contributed test implementations. Since its inception as an open source project in 2013, community contributions have been numerous and continuous. Today, at the launch of Round 19, the project has processed more than 4,600 pull requests!

We can also measure the breadth of the project using time. We continuously run the benchmark suite, and each full run now takes approximately 111 hours (4.6 days) to execute the current suite of 2,625 tests. That number continues to grow steadily as we receive further test implementations.

Composite scores and TPR

Round 19 introduces two new features in the results web site: composite scores and a hardware environment score we’re calling the TechEmpower Performance Rating (TPR). Both are available on the Composite scores tab for Rounds 19 and beyond.

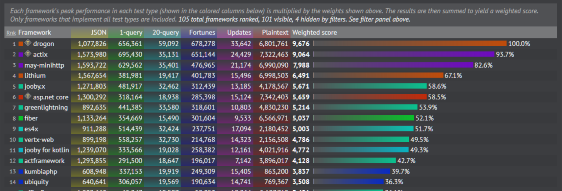

Composite scores

Frameworks for which we have full test coverage will now have composite scores, which reflect an overall performance score across the project’s test types: JSON serialization, Single-query, Multi-query, Updates, Fortunes, and Plaintext. For each round, we normalize the results for each test type and then apply a subjective weight to each (e.g., we have given Fortunes a higher weight than Plaintext because Fortunes is a more realistic test type).
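To make the normalize-then-weight idea concrete, here is a minimal sketch in Python. It is not the project's actual scoring code: the weight values, the test-type keys, and the choice to normalize against the best result in each test type are all assumptions made for illustration. The only detail taken from the description above is that Fortunes is weighted more heavily than Plaintext and that frameworks without full coverage are left out.

```python
from typing import Dict

# Hypothetical weights, for illustration only. The real weights are chosen by
# the project; all we know from the text is that Fortunes outweighs Plaintext.
WEIGHTS: Dict[str, float] = {
    "json": 1.0,
    "single_query": 1.0,
    "multi_query": 1.0,
    "updates": 1.0,
    "fortunes": 1.5,
    "plaintext": 0.5,
}


def composite_scores(results: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Compute a weighted composite score per framework.

    `results` maps framework name -> test type -> requests per second.
    Frameworks missing any test type are excluded (no full coverage).
    """
    # Keep only frameworks that have a result for every test type.
    eligible = {
        name: r for name, r in results.items()
        if all(test in r for test in WEIGHTS)
    }
    if not eligible:
        return {}

    # Assumed normalization: divide by the best result in each test type,
    # so every normalized value falls between 0 and 1.
    best = {test: max(r[test] for r in eligible.values()) for test in WEIGHTS}

    scores: Dict[str, float] = {}
    for name, r in eligible.items():
        scores[name] = sum(
            WEIGHTS[test] * (r[test] / best[test] if best[test] > 0 else 0.0)
            for test in WEIGHTS
        )
    return scores
```

Under these assumptions, a framework that leads every test type receives the sum of all weights, and a framework that implements only some test types does not appear in the output at all.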

When additional test types are added, frameworks will need to include implementations of these test types to be included in the composite score chart.

You can read more about composite scores at the GitHub wiki.

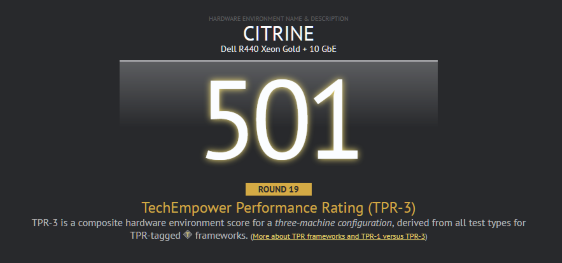

TechEmpower Performance Rating (TPR)

With the composite scores described above, we are now able to use web application frameworks to measure the performance of hardware environments. This is an exploration of a new use case for this project that is unrelated to the original goal of improving software performance. We believe this could be an interesting measure of hardware environment performance because it’s a holistic test of compute and network capacity, and because it is based on a wide spectrum of software platforms and frameworks used in the creation of real-world applications. We look forward to your feedback on this feature.

Right now, the only hardware environments being measured are our Citrine physical hardware environment and Azure D3v2 instances. However, we are implementing a means for users to contribute and visualize results from other hardware environments for comparison.

Hardware performance measurements must use the specific commit for a round (such as 801ee924 for Round 19) to be comparable, since the test implementations continue to evolve over time.

Because a hardware performance measurement shouldn’t take 4.6 days to complete, we use a subset of the project’s immense number of frameworks when measuring hardware performance. We’ve selected and flagged frameworks that represent the project’s diversity of technology platforms. Any results files that include this subset can be used for measuring hardware environment performance.
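The two conditions described above (matching the round's commit and covering the flagged subset) can be sketched as a simple pre-check in Python. This is an illustrative sketch only: the function names and the placeholder framework names are hypothetical, and it does not reflect the actual layout of a results file. The commit prefix `801ee924` is the Round 19 commit mentioned above.

```python
from typing import FrozenSet, Iterable, Set

# Commit for Round 19, as stated in the text.
ROUND_19_COMMIT = "801ee924"

# Hypothetical placeholder names; the real TPR-flagged list is maintained
# by the project and is not reproduced here.
TPR_FLAGGED: FrozenSet[str] = frozenset(
    {"framework-a", "framework-b", "framework-c"}
)


def is_same_commit(result_commit: str, round_commit: str = ROUND_19_COMMIT) -> bool:
    """True if the run's commit hash begins with the round's (short) hash."""
    return bool(round_commit) and result_commit.lower().startswith(round_commit.lower())


def missing_tpr_frameworks(
    result_frameworks: Iterable[str],
    flagged: FrozenSet[str] = TPR_FLAGGED,
) -> Set[str]:
    """Return flagged frameworks that are absent from a set of results."""
    return set(flagged) - set(result_frameworks)


def usable_for_tpr(result_commit: str, result_frameworks: Iterable[str]) -> bool:
    """A run qualifies only if the commit matches and no flagged framework is missing."""
    return is_same_commit(result_commit) and not missing_tpr_frameworks(result_frameworks)
```

A run that includes extra frameworks beyond the flagged subset still passes this check, since only the flagged frameworks are required.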

The set of TPR-flagged frameworks will evolve over time, especially if we receive further input from the community. Our goal is to constrain a run intended for hardware performance measurement to several hours of execution time rather than several days. As a result, we want to keep the total number of flagged frameworks between 15 and 25.

You can read more about TPR at the GitHub wiki.

Other Round 19 Updates

Once again, Nate Brady tracked interesting changes since the previous round at the GitHub repository for the project. In summary:

- Updated database platforms: MySQL to v8.0, MongoDB to v4.2, and Postgres to v12.

- Added database test verifications to ensure implementations are valid.

- Migrated the Citrine hardware environment from CentOS to Ubuntu 18.04.

- Migrated the Azure cloud environment from Ubuntu 16.04 to 18.04 and modified its Terraform script to select a commit recently used in Citrine for easier comparisons.

- Consolidated test requirements on GitHub.

- Clarified requirements for the World objects used in database tests.

- Updated the load generator.

Notes

Thanks again to contributors and fans of this project! As always, we really appreciate your continued interest, feedback, and patience!

Round 19 is composed of:

- Run 9a267248-17b0-4080-ac45-a50d25b4fc2a from Azure.

- Run 4c536195-90ff-40b8-8636-a719318a864b from Citrine.